Semantic Folding for Sensor Fusion

Real-Time, Low-Latency Sensor Fusion for Intelligent Systems

Semantic Folding enables high-resolution, low-latency processing of sensor fusion data by converting raw sensor inputs into structured semantic representations. This approach supports the development of intelligent systems that require robust, context-aware perception and control, without the heavy compute and labeled-data requirements of conventional AI models.

What is Sensor Fusion?

Sensor fusion refers to the process of integrating data from multiple heterogeneous sensors to derive a coherent, accurate, and real-time understanding of a system’s internal state or its operating environment. It’s a foundational capability for intelligent operations across sectors like manufacturing, aerospace, automotive, utilities, and energy.

Conventional fusion algorithms typically rely on statistical models, rule-based logic, or deep learning frameworks. They face recurring problems with scalability, generalization, and adaptability, especially in noisy or dynamic real-world conditions.

What is Semantic Folding?

Originally developed for Natural Language Processing (NLP), Semantic Folding creates high-dimensional, sparse binary vectors (semantic fingerprints) that encode the meaning of data by mapping it into a structured topological space. In NLP, this technique groups semantically similar words or concepts together spatially, enabling fast, unsupervised similarity comparisons.
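To make the idea of a sparse binary fingerprint concrete, the following Python sketch stores a fingerprint as a bitset (a Python `int`) and compares two fingerprints by counting the active bits they share. The 128 × 128 map size and the example bit positions are illustrative assumptions, not values taken from any particular implementation.

```python
# Minimal sketch: a semantic fingerprint as a sparse binary vector.
# Assumption: a 128 x 128 map (16,384 bits); fingerprints are packed into Python ints.
MAP_SIZE = 128 * 128


def to_bits(positions: set[int]) -> int:
    """Pack the active bit positions into an int used as a bitset."""
    bits = 0
    for p in positions:
        if not 0 <= p < MAP_SIZE:
            raise ValueError(f"position {p} is outside the map")
        bits |= 1 << p
    return bits


def overlap(a: int, b: int) -> int:
    """Number of active bits shared by two fingerprints (bitwise AND, then popcount)."""
    return bin(a & b).count("1")


def similarity(a: int, b: int) -> float:
    """Overlap normalized by the smaller fingerprint, giving a score in [0, 1]."""
    smaller = min(bin(a).count("1"), bin(b).count("1"))
    return overlap(a, b) / smaller if smaller else 0.0


# Hypothetical fingerprints: nearby positions stand for related meanings.
car = to_bits({10, 11, 12, 138, 139})
truck = to_bits({11, 12, 13, 139, 140})
banana = to_bits({9000, 9001, 9129})

print(overlap(car, truck))      # 3
print(similarity(car, banana))  # 0.0
```

Because similar meanings occupy nearby positions, related fingerprints share bits, and the comparison never needs floating-point vector arithmetic.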

This same principle can be extended to numerical sensor data, allowing sensor values to be encoded into semantic fingerprints based on the operational context in which they occur. The result is a compact, meaningful representation of system state that supports real-time, context-aware inference and decision-making.

How does Semantic Folding for Sensor Fusion work?

- Creation of a Semantic Space

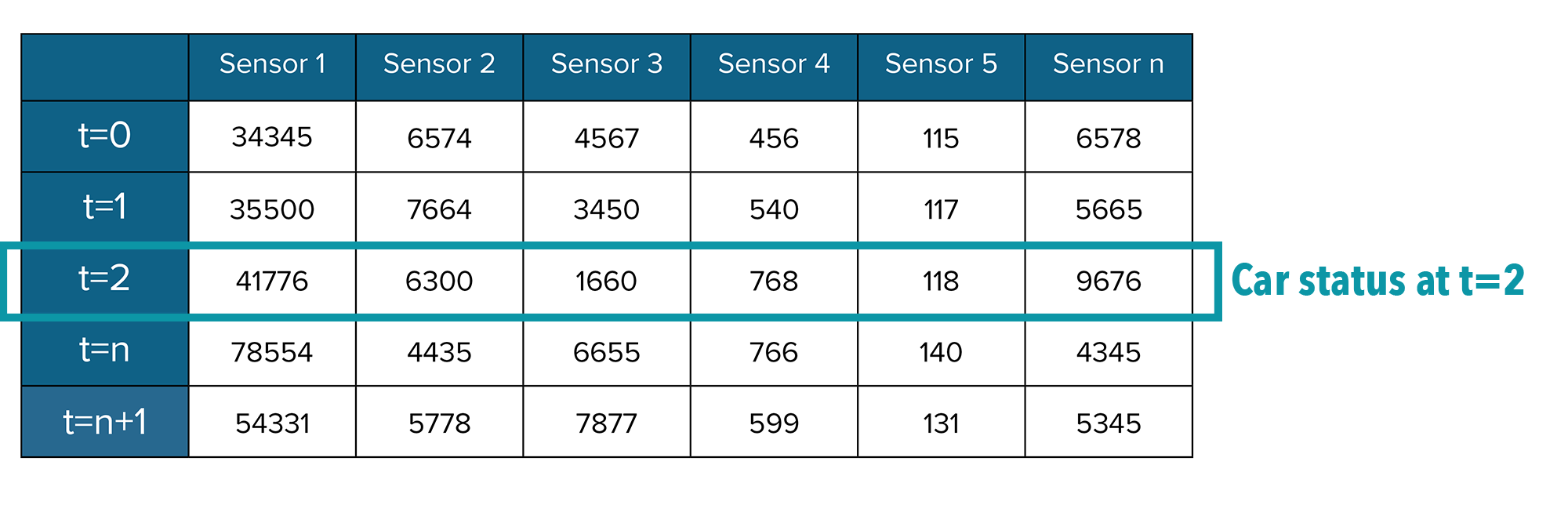

The first step is to create a semantic space based on a reference collection representing the use case. For automobile sensor fusion, for example, a data stream from the car’s sensors capturing all anticipated driving conditions and environments is used as training material. A set of concurrent sensor values is recorded every second and stored in a time-series training file.

Each set of sensor values represents the status of the car at a given moment. We call this set a “context”, analogous to a sentence in textual data, in which each sensor value is a “word”.
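Since fingerprints are attached to discrete “words”, continuous readings first have to become tokens. The sketch below assumes simple fixed-width binning per sensor and a CSV training file with a `timestamp` column followed by one column per sensor; the sensor names, bin widths, and file layout are all hypothetical.

```python
import csv
import io

# Hypothetical per-sensor bin widths; a real system would tune these per signal.
BIN_WIDTH = {"speed_kmh": 10.0, "rpm": 500.0, "coolant_c": 5.0}

# Hypothetical excerpt of a time-series training file: one row per second.
SAMPLE = """timestamp,speed_kmh,rpm,coolant_c
0,43.2,2100,88.5
1,52.0,2600,90.2
"""


def value_to_word(sensor: str, value: float) -> str:
    """Map a numeric reading to a discrete token, e.g. 43.2 km/h -> 'speed_kmh:40'."""
    width = BIN_WIDTH[sensor]
    lower_edge = int(value // width * width)
    return f"{sensor}:{lower_edge}"


def read_contexts(csv_text: str) -> list[list[str]]:
    """Turn each row (one second of concurrent readings) into a context of words."""
    contexts = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        contexts.append([value_to_word(s, float(row[s])) for s in BIN_WIDTH])
    return contexts


for ctx in read_contexts(SAMPLE):
    print(ctx)
# ['speed_kmh:40', 'rpm:2000', 'coolant_c:85']
# ['speed_kmh:50', 'rpm:2500', 'coolant_c:90']
```

Binning is only one possible tokenization. The point is that readings in different units (km/h, rpm, °C) all become words that can co-occur in the same context.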

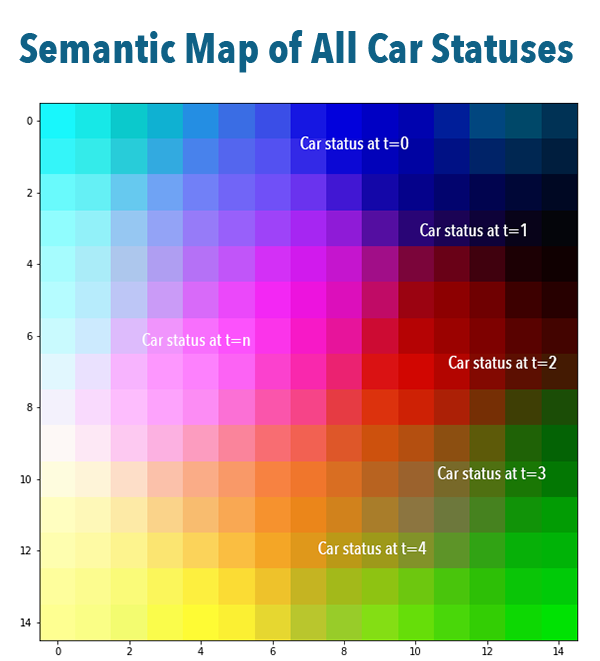

The system organizes these contexts into a high-dimensional metric space such that similar contexts are placed near each other and dissimilar ones are far apart. This forms the basis of the semantic map – capturing system behavior across time and conditions without needing labels.
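The text does not specify how the map is laid out. As a stand-in, the following sketch uses a tiny Kohonen self-organizing map, a well-known technique that places similar inputs on nearby grid cells. Each grid cell can then serve as one bit position of a fingerprint. The contexts, the 4 × 4 grid, and the training schedule are hypothetical.

```python
import math
import random

# Hypothetical contexts (one per second), already turned into "words".
CONTEXTS = [
    ["speed:100", "rpm:3000", "gear:6"],  # highway
    ["speed:110", "rpm:3000", "gear:6"],  # highway
    ["speed:30", "rpm:1500", "gear:3"],   # city traffic
    ["speed:40", "rpm:1500", "gear:3"],   # city traffic
    ["speed:0", "rpm:800", "gear:0"],     # parked, engine idling
    ["speed:0", "rpm:800", "gear:0"],     # the same state one second later
]


def vectorize(contexts):
    """Binary bag-of-words vector per context over the shared vocabulary."""
    vocab = {w: i for i, w in enumerate(sorted({w for c in contexts for w in c}))}
    vectors = []
    for ctx in contexts:
        v = [0.0] * len(vocab)
        for w in ctx:
            v[vocab[w]] = 1.0
        vectors.append(v)
    return vectors


def best_cell(weights, v):
    """Grid cell whose weight vector is closest (Euclidean) to v."""
    return min(weights, key=lambda cell: math.dist(weights[cell], v))


def train_som(vectors, rows=4, cols=4, epochs=200, seed=0):
    """Tiny Kohonen self-organizing map: similar vectors settle on nearby cells."""
    rng = random.Random(seed)
    dim = len(vectors[0])
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = 0.5 * (1.0 - frac)                                # decaying learning rate
        radius = max(1.0, max(rows, cols) / 2 * (1.0 - frac))  # shrinking neighborhood
        for v in vectors:
            bmu = best_cell(weights, v)
            for cell, w in weights.items():
                d = math.dist(cell, bmu)  # distance between cells on the grid
                if d <= radius:
                    h = math.exp(-(d * d) / (2 * radius * radius))
                    for i in range(dim):
                        w[i] += lr * h * (v[i] - w[i])
    return weights


def place_contexts(contexts, rows=4, cols=4):
    """Assign every context to its best-matching cell on the trained map."""
    vectors = vectorize(contexts)
    weights = train_som(vectors, rows, cols)
    return {i: best_cell(weights, v) for i, v in enumerate(vectors)}


placement = place_contexts(CONTEXTS)
for idx, cell in placement.items():
    print(idx, CONTEXTS[idx], "->", cell)
```

Identical contexts always land on the same cell. Which cells the highway and city contexts end up on depends on the random initialization, so only their relative closeness is meaningful. No labels are used at any point.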

- Fingerprint Generation

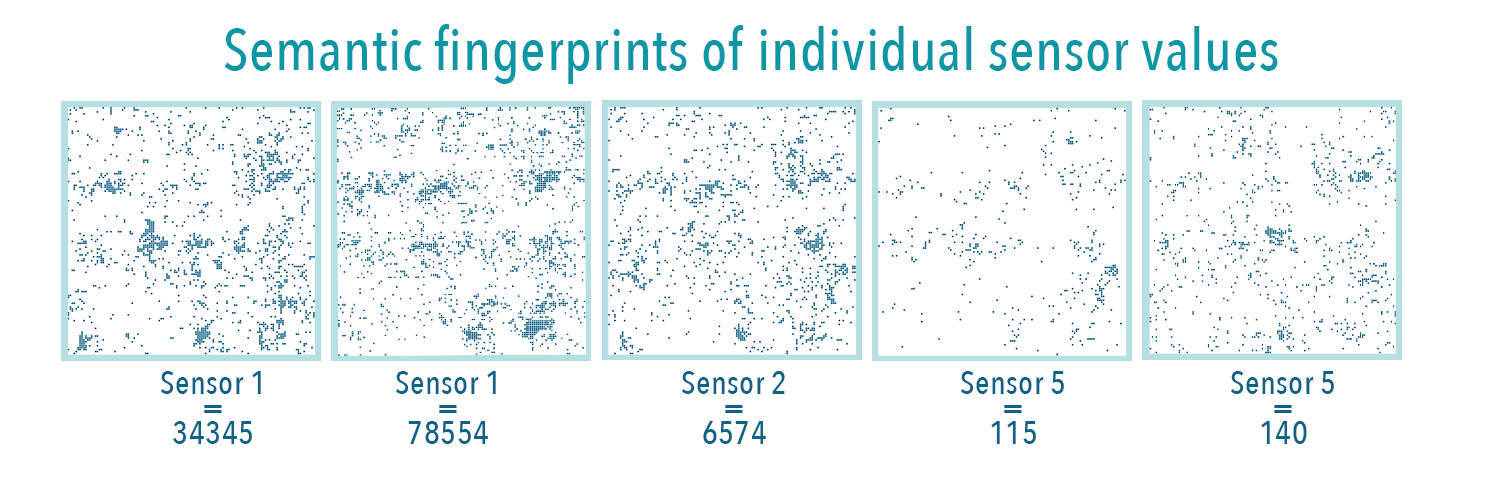

From this map, the system derives a semantic fingerprint for each individual sensor value. A fingerprint is a sparse binary vector marking the map positions of all contexts in which the value appears. Together, these fingerprints form a sensor dictionary used for real-time encoding and interpretation.
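Given a finished map, a word's fingerprint can be built by setting the bit of every cell that holds a context containing that word. This sketch assumes one context per cell and a hand-written placement standing in for the output of a trained map. Any weighting or sparsification a production system might apply is omitted.

```python
from collections import defaultdict

ROWS, COLS = 4, 4

# Hypothetical output of the map-building step: context index -> (row, col) cell.
CONTEXTS = [
    ["speed:100", "rpm:3000", "gear:6"],
    ["speed:110", "rpm:3000", "gear:6"],
    ["speed:30", "rpm:1500", "gear:3"],
    ["speed:0", "rpm:800", "gear:0"],
]
PLACEMENT = {0: (0, 0), 1: (0, 1), 2: (2, 2), 3: (3, 3)}


def build_dictionary(contexts, placement, cols=COLS):
    """Fingerprint of each word = bitset of the cells holding contexts that contain it."""
    dictionary = defaultdict(int)
    for idx, ctx in enumerate(contexts):
        r, c = placement[idx]
        bit = 1 << (r * cols + c)  # flatten (row, col) into a single bit position
        for word in set(ctx):
            dictionary[word] |= bit
    return dict(dictionary)


def active_cells(fingerprint, cols=COLS):
    """Decode a fingerprint back into (row, col) cells, for inspection."""
    cells, pos = [], 0
    while fingerprint:
        if fingerprint & 1:
            cells.append(divmod(pos, cols))
        fingerprint >>= 1
        pos += 1
    return cells


sensor_dictionary = build_dictionary(CONTEXTS, PLACEMENT)
print(active_cells(sensor_dictionary["rpm:3000"]))  # [(0, 0), (0, 1)]
print(active_cells(sensor_dictionary["gear:3"]))    # [(2, 2)]
```

A value that occurs in many similar contexts, such as `rpm:3000` here, activates a cluster of neighboring cells. That clustering is what makes fingerprint overlap a meaningful similarity measure.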

- Real-Time Inference

During operation, new sensor readings are transformed into semantic fingerprints and compared to known patterns by measuring their bitwise overlap. Because each comparison reduces to a bitwise AND followed by a count of the shared active bits, anomaly detection, classification, and state estimation can run in real time with high accuracy and minimal compute.
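The inference loop can be sketched as follows. It assumes, hypothetically, that a reading's fingerprint keeps only the map positions activated by at least two of its words, and that known operating states are stored as reference fingerprints. The dictionary contents, the `min_votes` rule, and the 0.5 threshold are illustrative assumptions, not parameters from the source.

```python
from collections import Counter


def bits(positions):
    """Pack an iterable of active positions into an int bitset."""
    out = 0
    for p in positions:
        out |= 1 << p
    return out


def popcount(x):
    return bin(x).count("1")


# Hypothetical sensor dictionary (word -> active map positions) and known states.
DICTIONARY = {
    "speed:100": {0, 1, 2, 5},
    "rpm:3000": {1, 2, 6},
    "gear:6": {2, 5, 6, 7},
    "speed:0": {40, 41, 45},
    "rpm:800": {41, 45, 46},
    "gear:0": {40, 45, 46, 47},
}
PATTERNS = {"highway": bits({1, 2, 5, 6}), "parked": bits({41, 45, 46})}


def encode(words, min_votes=2):
    """Context fingerprint: keep map positions activated by at least `min_votes` words."""
    votes = Counter(p for w in words for p in DICTIONARY.get(w, ()))
    return bits(p for p, n in votes.items() if n >= min_votes)


def classify(words, threshold=0.5):
    """Best-matching known state by bitwise overlap, or 'anomaly' below `threshold`."""
    fp = encode(words)
    best, best_score = "anomaly", 0.0
    for name, pattern in PATTERNS.items():
        score = popcount(fp & pattern) / popcount(pattern)
        if score > best_score:
            best, best_score = name, score
    return (best, best_score) if best_score >= threshold else ("anomaly", best_score)


print(classify(["speed:100", "rpm:3000", "gear:6"]))  # ('highway', 1.0)
print(classify(["speed:100", "rpm:3000", "gear:0"]))  # ('highway', 0.5), faulty gear reading
print(classify(["speed:55", "rpm:2000", "gear:4"]))   # ('anomaly', 0.0), never seen in training
```

In this toy example, the second call shows how contextual encoding can tolerate a single inconsistent sensor. The faulty gear value lowers the score, but the other two readings still point to the highway state.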

How Semantic Folding Solves Key Sensor Fusion Challenges

| Challenge | Conventional Limitation | Semantic Folding Solution |
|---|---|---|
| Incompatible sensor modalities | Hard to normalize and fuse different units, scales, and formats | Encodes all sensor types into a unified semantic space based on context |
| Lack of contextual understanding | Traditional fusion methods operate on raw values or engineered features | Context-based encoding captures relationships between values and system behavior |
| Need for large labeled datasets | Supervised learning methods require extensive annotation and retraining | Fully unsupervised learning from unlabeled multivariate time series |
| High computational overhead | Deep models and rule-based systems demand extensive compute and tuning | Ultra-lightweight semantic fingerprints enable low-latency inference with minimal resources |
| Latency and bandwidth constraints | Aggregated sensor streams increase transmission and processing delay | Local semantic inference enables edge deployment and real-time responsiveness |
| Fragile fault handling | Error in one sensor often disrupts inference | Contextual encoding enables high fault and noise tolerance |