Proof-of-Concept:

How Semantic Folding Enhances Early Warning and Anomaly Detection in Aviation Safety

Goal

Develop a predictive model capable of classifying object types and anomalies based on radar signature data from air traffic control systems.

Potential Aviation Use Cases

Airspace Safety & Intrusion Detection

- Detect and classify object types and anomalies (e.g., unauthorized flight paths, lost transponders).

- Identify potential hazards such as birds near runways or in approach/departure corridors in real time.

- Impact: Enhances situational awareness and supports rapid incident response for air traffic controllers and airport security teams.

Unmanned Aerial Vehicle / Drone Activity Monitoring

- Detect low-RCS (Radar Cross Section), slow-moving or hovering drones.

- Classify UAV types and recognize swarming behavior based on radar signature patterns.

- Impact: Supports enforcement of UAV restrictions, improves passenger safety, and prevents runway disruption.

Challenges

- Radar signals are represented as complex numbers that capture magnitude and phase information, which makes them difficult to process and interpret.

- Traditional methods struggle to detect anomalies not seen during training and fail to generalize across new object types and behaviors.

- Conventional ML models require large volumes of labelled data, which are hard to gather for rare events; this makes the models difficult to scale and adapt in real-world environments.

- Noise, clutter and radar interference degrade signal clarity and reduce detection accuracy.

- Multiple simultaneous radar streams introduce latency and bandwidth constraints.
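To illustrate the first challenge, the sketch below splits complex-valued radar samples into magnitude and phase using Python's standard library. The sample values and the `to_polar` helper are hypothetical and not taken from the POC data; they only show what "magnitude and phase information" means for a single complex number.

```python
import cmath
import math


def to_polar(samples):
    """Split complex samples into (magnitude, phase in radians) pairs."""
    return [cmath.polar(s) for s in samples]


# Hypothetical I/Q samples, chosen for illustration only.
samples = [complex(3.0, 4.0), complex(0.0, 2.0), complex(-1.0, 0.0)]

polar = to_polar(samples)
magnitudes = [m for m, _ in polar]  # signal strength per sample
phases = [p for _, p in polar]      # phase angle per sample, in (-pi, pi]

for s, (m, p) in zip(samples, polar):
    print(f"{s}: magnitude={m:.3f}, phase={math.degrees(p):.1f} deg")
```

Each sample therefore carries two coupled quantities, which is one reason raw radar data is harder to handle than a plain real-valued signal.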

Solution

- For this POC, 8,766 radar signal records from civil airport surveillance were used to train a radar semantic space in a fully unsupervised process.

- Each incoming radar signature is converted into a semantic fingerprint, capturing the meaning (behavioral context) of the signal.

- These fingerprints are aggregated and sparsified to create a distinct classifier fingerprint for each radar feature or object class (e.g., aircraft type, drones).

- In live operation, incoming radar signatures are converted into semantic fingerprints in real time and compared against class fingerprints stored in a reference database.

- Classification is achieved through a simple bitwise overlap calculation, measuring the number of shared bits between the input and each class fingerprint.
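The aggregation, sparsification and overlap steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not Cortical.io's implementation: fingerprints are modelled as Python sets of active bit positions, sparsification is assumed to mean keeping the most frequently active bits, and the function names, `max_bits` parameter and toy data are all hypothetical.

```python
from collections import Counter


def aggregate_and_sparsify(fingerprints, max_bits):
    """Build a class fingerprint from example fingerprints.

    Assumption: count how often each bit is active across the examples
    and keep only the max_bits most frequent positions.
    """
    counts = Counter(bit for fp in fingerprints for bit in fp)
    # Rank by descending frequency, then by bit position for determinism.
    ranked = sorted(counts, key=lambda bit: (-counts[bit], bit))
    return frozenset(ranked[:max_bits])


def overlap(a, b):
    """Number of bit positions that are active in both fingerprints."""
    return len(a & b)


def classify(fingerprint, class_fingerprints):
    """Return the best-matching class and the overlap score per class."""
    scores = {name: overlap(fingerprint, cfp)
              for name, cfp in class_fingerprints.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy example fingerprints (hypothetical, far smaller than real ones).
aircraft_examples = [{1, 2, 3, 4}, {1, 2, 3, 9}, {1, 2, 4, 7}]
drone_examples = [{10, 11, 12, 3}, {10, 11, 13, 4}, {10, 11, 12, 13}]

class_fingerprints = {
    "aircraft": aggregate_and_sparsify(aircraft_examples, max_bits=4),
    "drone": aggregate_and_sparsify(drone_examples, max_bits=4),
}

incoming = {1, 2, 4, 11}
best_class, scores = classify(incoming, class_fingerprints)
print(best_class, scores)
```

If fingerprints were stored as packed bit arrays or integers instead of sets, the same overlap would be the population count of a bitwise AND, which is what keeps the comparison cheap at run time.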

Results

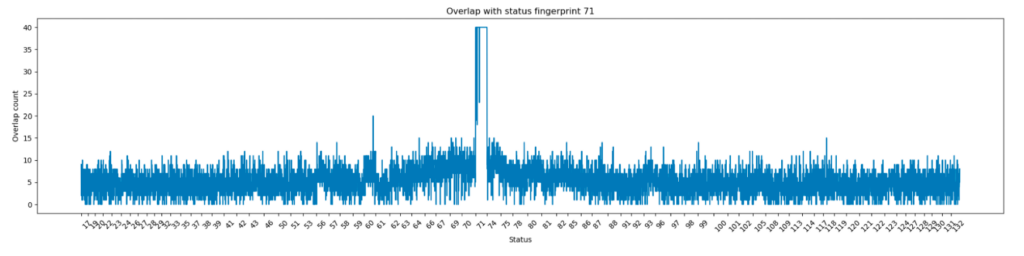

- The semantic overlap between a radar signal’s fingerprint and each class fingerprint can be visualized on a two-axis chart:

  - X-axis: Object classes (e.g., aircraft type, drone, bird)

  - Y-axis: Overlap score (degree of semantic similarity based on bit count)

- Key Insight: Consecutive radar signal fingerprints show a high degree of similarity, indicating strong internal coherence. Because each fingerprint normally overlaps strongly with its predecessor, even a gradual decline in overlap stands out. This means Semantic Folding enables the early detection of subtle, gradually emerging anomalies, often before traditional systems can register a deviation or trigger a response.
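One way to act on this insight is to track the overlap between consecutive fingerprints and flag any point where it drops. The sketch below assumes fingerprints are sets of active bit positions and uses a fixed, hypothetical `min_overlap` threshold; the source does not state how deviations are actually detected, so this is an illustration only.

```python
def consecutive_overlaps(fingerprints):
    """Overlap score between each fingerprint and the one before it."""
    return [len(a & b) for a, b in zip(fingerprints, fingerprints[1:])]


def flag_drops(fingerprints, min_overlap):
    """Indices of fingerprints whose overlap with their predecessor
    falls below min_overlap (a hypothetical, hand-picked threshold)."""
    overlaps = consecutive_overlaps(fingerprints)
    return [i + 1 for i, score in enumerate(overlaps) if score < min_overlap]


# Toy stream: the fourth fingerprint breaks with the preceding pattern.
stream = [
    {1, 2, 3, 4},
    {1, 2, 3, 5},
    {1, 2, 5, 6},
    {6, 7, 8, 9},
    {7, 8, 9, 10},
]

overlaps = consecutive_overlaps(stream)
flagged = flag_drops(stream, min_overlap=2)
print(overlaps, flagged)
```

In practice the threshold would likely be derived from the overlap levels observed during normal operation rather than fixed by hand.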

Conclusion

This proof of concept demonstrates that Semantic Folding can significantly enhance situational awareness, safety, and operational efficiency in airport surveillance systems.

Cortical.io’s approach is effective across both civil and defense airspace applications, making it a versatile and scalable solution for modern airspace management.

The Cortical.io Difference

- Instant anomaly detection: Identifies semantically unfamiliar patterns in real time, even if they were never encountered during training.

- Adapts to dynamic environments: Semantic fingerprints automatically adjust to changing conditions without manual tuning.

- Generalizes across missions: Detects object classes across multiple use cases with minimal retraining.

- Resilient to noise and clutter: Maintains high performance in degraded or jammed environments.

- No manual feature engineering: Learns meaningful patterns autonomously, which accelerates deployment.